Smart Recommendations Powered by Your Database

The Problem

Bookstores want to help customers discover books in a more personal, intuitive way, but traditional search only works when users already know what they're looking for. Staff can't possibly know every title, and most online recommendation systems aren't connected to what's actually available in-store.

The challenge was to explore whether AI could understand natural-language requests and generate meaningful recommendations, limited strictly to books the bookstore currently has in inventory.

The Solution

We built an AI-powered search and recommendation system using the bookstore's existing inventory data. The only required input is a simple list of ISBNs, which is automatically enriched with public book metadata such as author, genre, description, and themes.
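The enrichment step can be sketched roughly as follows. This is a minimal illustration, not the project's actual pipeline: the `EnrichedBook` fields mirror the metadata listed above, while `enrich`, `SAMPLE_METADATA`, and the placeholder book details are hypothetical. An in-memory dictionary stands in for whichever public metadata source is used, since that source is not named here. The only fixed rule in the sketch is the standard ISBN-13 check digit, used to reject malformed input before any lookup.

```python
from dataclasses import dataclass, field


@dataclass
class EnrichedBook:
    # Fields mirror the metadata named in the text; the exact schema is an assumption.
    isbn: str
    title: str = ""
    author: str = ""
    genre: str = ""
    description: str = ""
    themes: list[str] = field(default_factory=list)


def normalize_isbn(raw: str) -> str:
    """Strip hyphens and spaces so every lookup uses one canonical form."""
    return raw.replace("-", "").replace(" ", "")


def is_valid_isbn13(isbn: str) -> bool:
    """Verify the ISBN-13 check digit: digit weights alternate 1, 3, 1, 3, ..."""
    if len(isbn) != 13 or not (isbn.isascii() and isbn.isdigit()):
        return False
    total = sum(int(d) * (1 if i % 2 == 0 else 3) for i, d in enumerate(isbn))
    return total % 10 == 0


def enrich(isbns, metadata_source):
    """Return (enriched, unmatched) for a list of raw ISBN strings.

    metadata_source is any mapping from a normalized ISBN to a metadata dict.
    Here it is a hypothetical stand-in for a public book-metadata lookup.
    """
    enriched, unmatched = [], []
    for raw in isbns:
        isbn = normalize_isbn(raw)
        record = metadata_source.get(isbn) if is_valid_isbn13(isbn) else None
        if record is None:
            unmatched.append(raw)  # keep for manual review rather than guessing
        else:
            enriched.append(EnrichedBook(isbn=isbn, **record))
    return enriched, unmatched


# Placeholder metadata for illustration only; not a real catalogue entry.
SAMPLE_METADATA = {
    "9780306406157": {
        "title": "Example Title",
        "author": "Example Author",
        "genre": "mystery",
        "description": "A placeholder description.",
        "themes": ["magic", "boarding school"],
    }
}

books, missing = enrich(["978-0-306-40615-7", "1234567890123"], SAMPLE_METADATA)
```

Returning the unmatched ISBNs separately keeps the inventory list authoritative: a title with no metadata is still in stock, it just cannot be recommended until someone fills in the gap.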

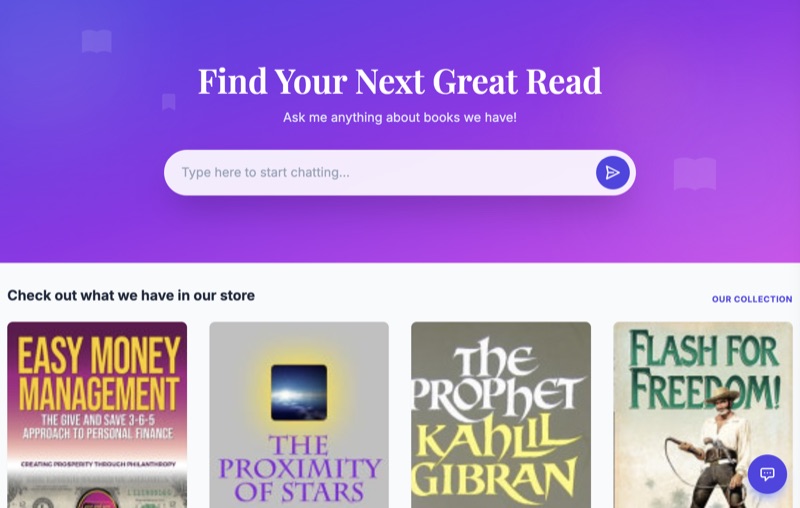

Customers can ask natural questions such as "a mystery similar to Harry Potter but for adults," and the system searches the enriched inventory to recommend relevant books that are actually in stock.
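To make the "in stock only" constraint concrete, here is a deliberately simple sketch of ranking inventory against a free-text request. It is not the model used in this project, which is not described here. It swaps in plain word-overlap cosine similarity so the example runs with the standard library alone. Word overlap cannot tell that a book resembles Harry Potter unless the metadata says so, which is the kind of gap an AI model is meant to close. The `recommend` function, the `stock` field, and the sample records are all hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def recommend(query: str, inventory: list[dict], top_k: int = 3) -> list[str]:
    """Return ISBNs of the best-matching books that are currently in stock."""
    query_vec = Counter(tokenize(query))
    scored = []
    for book in inventory:
        if book["stock"] <= 0:
            continue  # never recommend a title the store cannot sell today
        text = " ".join(
            [book["title"], book["genre"], book["description"], " ".join(book["themes"])]
        )
        score = cosine(query_vec, Counter(tokenize(text)))
        if score > 0:
            scored.append((score, book["isbn"]))
    # Highest score first; ties broken by ISBN so results are deterministic.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [isbn for _, isbn in scored[:top_k]]


# Hypothetical enriched inventory; identifiers and text are placeholders.
INVENTORY = [
    {"isbn": "isbn-a", "title": "Example Mystery", "genre": "mystery",
     "description": "Adult detective story set in a hidden school of magic",
     "themes": ["magic"], "stock": 2},
    {"isbn": "isbn-b", "title": "Another Mystery", "genre": "mystery",
     "description": "A mystery full of magic", "themes": ["magic"], "stock": 0},
    {"isbn": "isbn-c", "title": "Weeknight Cooking", "genre": "cookbook",
     "description": "Quick dinner recipes", "themes": ["food"], "stock": 5},
]

results = recommend("a mystery for adults with magic", INVENTORY)
```

In this sample, `isbn-b` matches the request well but is excluded because its stock is zero, and `isbn-c` is in stock but shares no words with the request. Applying the stock filter before scoring, rather than after, guarantees that an out-of-stock title can never occupy a result slot.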

To demonstrate feasibility, we created a complete example bookstore website using a mock inventory to show how the experience would work in practice.

The Result

The experiment suggested that AI-powered search can substantially improve book discovery compared with keyword-based search alone. Customers receive more relevant, context-aware recommendations, and staff no longer need to guide every request manually.

Because the system is tied directly to live inventory, recommendations remain accurate and require minimal maintenance. The same approach can be applied to any business where users need to search large, structured datasets using natural language.